MPICH, DCMF, and SPI

Latest revision as of 15:44, 8 May 2009

Introduction

To support high performance computing (HPC) applications, specifically MPI applications, we have ported IBM's CNK communication software stack to the ZeptoOS compute node Linux environment. The MPICH used in this ZeptoOS release is mpich2-1.0.7 with IBM patches. It is reasonably stable, and the performance of MPI applications on ZeptoOS compute node Linux is comparable to that on CNK. While there are some limitations at the moment, there are benefits as well.

Benefits:

- No limitation on the number of threads
  - 4 or more OpenMP threads per node
  - Additional threads for I/O or background tasks
- It is Linux!
  - Debugging tools such as gdb, strace, etc.
  - Various file systems, such as ramfs

Current limitations:

- Only the SMP mode is supported

- Shared libraries are not provided at the moment

- No binary compatibility between CNK and ZeptoOS CN Linux MPI binaries

We will support a VN-equivalent mode (multiple MPI tasks per node) and provide shared libraries in a future release.

As in the IBM CNK environment, the Deep Computing Messaging Framework (DCMF) and the System Programming Interface (SPI) are available. It is possible to write DCMF or SPI code directly if necessary. DCMF is a communication library that provides non-blocking operations. Please refer to the DCMF wiki (http://dcmf.anl-external.org/wiki/index.php/Main_Page) for details. We are using DCMF version 1.0.0 in the current ZeptoOS release, which is older than the DCMF in the current driver release (V1R3M0). SPI is the lowest-level user-space API for the torus DMA, the collective network, BGP-specific lock mechanisms, and other compute-node-specific features. There is no public documentation on SPI at the moment, but almost all header files and source code are available. Internally, MPICH depends on DCMF, which in turn depends on SPI. The "Software stack layout" section describes this in more detail.

ZCB and Big memory

MPI applications running under the ZeptoOS compute node Linux environment (technically, applications that require DMA operations or maximum memory bandwidth) need to be configured as Zepto Compute Binaries (ZCB). This is done using zelftool, which is invoked behind the scenes when linking a binary using the ZeptoOS MPI compiler wrapper scripts (zmpicc, etc.).

The ZeptoOS compute node kernel treats ZCB executables differently from ordinary processes. It creates a special memory mapping region called big memory, which is covered by large pages with semi-static TLB entries, and it loads all application sections into that region. The big memory region incurs virtually no TLB misses, and it also enables DMA operations.

Some system calls will not work correctly if used from a ZCB process, in particular fork (but creating threads does work). Also, because big memory is a separate memory region set up at kernel boot time, its size is fixed. It is set to 256 MB by default, which could be too small for larger MPI processes; it can be increased before booting a partition (see the FAQ entry "Why large MPI processes do not work"), at the expense of the ordinary Linux paged memory.
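As a rough sanity check on the sizing issue described above, the following shell sketch compares an estimated per-process memory footprint against the big memory size. The function name `fits_big_memory` and its arguments are hypothetical and not part of ZeptoOS; the 256 MB default comes from the text, and the footprint is a number you would have to estimate yourself.

```shell
# Hypothetical helper (not part of ZeptoOS): report whether an estimated
# per-process footprint fits in the big memory region.
#   $1 = estimated footprint in MB
#   $2 = big memory size in MB (defaults to the 256 MB mentioned above)
fits_big_memory() {
    need_mb=$1
    bigmem_mb=${2:-256}
    if [ "$need_mb" -le "$bigmem_mb" ]; then
        echo "fits: ${need_mb} MB <= ${bigmem_mb} MB"
    else
        echo "too large: ${need_mb} MB > ${bigmem_mb} MB; increase big memory before booting the partition"
        return 1
    fi
}
```

For example, `fits_big_memory 300` fails with the default size, while `fits_big_memory 300 512` succeeds once big memory has been raised to 512 MB.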

Compiling HPC applications

While the same compilers can be used as for applications running under IBM CNK, the ZeptoOS compute node environment requires linking with ZeptoOS-specific communication libraries (applications linked with the CNK MPI will not work on ZeptoOS).

Compiler wrapper scripts

We provide compiler wrapper scripts which automatically link with appropriate libraries from the ZeptoOS installation directory. We provide the same set of wrapper scripts that IBM provides, with an extra z prefix:

- zmpicc, zmpicxx, zmpif77, zmpif90: wrapper scripts that invoke BGP-enhanced GNU compilers
- zmpixlc, zmpixlcxx, zmpixlf2003, zmpixlf77, zmpixlf90, zmpixlf95: wrapper scripts that invoke IBM XL compilers
- zmpixlc_r, zmpixlcxx_r, zmpixlf2003_r, zmpixlf77_r, zmpixlf90_r, zmpixlf95_r: wrapper scripts that invoke IBM XL compilers (thread-safe compilation for OpenMP)

To get insight into the internals of these scripts, invoke them with the -show option.

A compilation example

There is nothing special about compiling a program for ZeptoOS. Here is a real-world example of how to build the well-known Parallel Ocean Program (POP, http://climate.lanl.gov/Models/POP/).

```
$ wget http://climate.lanl.gov/Models/POP/POP_2.0.1.tar.Z
$ tar xvfz POP_2.0.1.tar.Z && cd pop
$ ./setup_run_dir ztest && cd ztest
$ edit ibm_mpi.gnu   # see the patch below
$ export ARCHDIR=ibm_mpi
$ make               # takes a while
$ edit pop_in        # test data set
-  nprocs_clinic = 4
-  nprocs_tropic = 4
+  nprocs_clinic = 64
+  nprocs_tropic = 64
$ cqsub -n 64 -t 10 -k <zepto_profile> ./pop

--------------------
--- orig/ibm_mpi.gnu    2009-04-15 15:01:58.666457601 -0500
+++ ztest/ibm_mpi.gnu   2009-04-15 14:17:58.099132435 -0500
@@ -6,17 +6,18 @@
 # will someday be a file which is a cookbook in Q&A style: "How do I do X?"
 # is followed by something like "Go to file Y and add Z to line NNN."
 #
-FC = mpxlf90_r
-LD = mpxlf90_r
-CC = mpcc_r
-Cp = /usr/bin/cp
-Cpp = /usr/ccs/lib/cpp -P
+ZPATH=<zepto_dir>
+FC = $(ZPATH)/zmpixlf90
+LD = $(ZPATH)/zmpixlf90
+CC = $(ZPATH)/zmpixlc
+Cp = /bin/cp
+Cpp = /usr/bin/cpp -P
 AWK = /usr/bin/awk
-ABI = -q64
+#ABI = -q64
 COMMDIR = mpi
-NETCDFINC = -I/usr/local/include
-NETCDFLIB = -L/usr/local/lib
+NETCDFINC = -I/soft/apps/netcdf-4.0/include/
+NETCDFLIB = -L/soft/apps/netcdf-4.0/lib

 # Enable MPI library for parallel code, yes/no.

@@ -58,7 +59,8 @@
 #
 #----------------------------------------------------------------------------

-FBASE = $(ABI) -qarch=auto -qnosave -bmaxdata:0x80000000 $(NETCDFINC) -I$(ObjDepDir)
+#FBASE = $(ABI) -qarch=auto -qnosave -bmaxdata:0x80000000 $(NETCDFINC) -I$(ObjDepDir)
+FBASE = $(ABI) -qarch=auto -qnosave $(NETCDFINC) -I$(ObjDepDir)

 ifeq ($(TRAP_FPE),yes)
 FBASE := $(FBASE) -qflttrap=overflow:zerodivide:enable -qspillsize=32704
```

Compiling without the wrapper scripts

If one wishes to invoke the compiler directly, please make sure that the Makefile or build environment points to ZeptoOS header files and libraries correctly. An example would be:

```
$ /bgsys/drivers/ppcfloor/gnu-linux/bin/powerpc-bgp-linux-gcc \
    -o mpi-test-linux -Wall -O3 -I<zepto_dir>/include mpi-test.c \
    -L<zepto_dir>/lib -lmpich.zcl -ldcmfcoll.zcl -ldcmf.zcl -lSPI.zcl -lzcl \
    -lzoid_cn -lrt -lpthread -lm
$ <zepto_dir>/bin/zelftool -e mpi-test-linux
```

Notes:

- Replace <zepto_dir> with the ZeptoOS install path.

- Do not forget to call the zelftool utility, which makes the executable a Zepto Compute Binary.
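Because the zelftool step is easy to forget, it can help to keep both steps together. The sketch below is one way to do that: a hypothetical shell function, `zcb_build_cmds` (not part of ZeptoOS), that prints the two commands from the example above for a given install path, source file, and output name. It only prints; nothing is compiled. It assumes paths without spaces, and the compiler path and library list are copied from the example rather than discovered.

```shell
# Hypothetical helper (not part of ZeptoOS): print the compile/link command
# and the zelftool command from the example above. Nothing is executed.
#   $1 = ZeptoOS install path (the <zepto_dir> placeholder)
#   $2 = C source file
#   $3 = output binary name
zcb_build_cmds() {
    zdir=$1
    src=$2
    out=$3
    gcc=/bgsys/drivers/ppcfloor/gnu-linux/bin/powerpc-bgp-linux-gcc
    libs="-lmpich.zcl -ldcmfcoll.zcl -ldcmf.zcl -lSPI.zcl -lzcl -lzoid_cn -lrt -lpthread -lm"
    # Step 1: compile and link against the ZeptoOS communication libraries.
    echo "$gcc -o $out -Wall -O3 -I$zdir/include $src -L$zdir/lib $libs"
    # Step 2: mark the result as a Zepto Compute Binary.
    echo "$zdir/bin/zelftool -e $out"
}
```

Calling the function with the real install path, `mpi-test.c`, and `mpi-test-linux` prints the same two commands as the example; piping that output to `sh` would run them.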

Building MPICH, DCMF, and SPI libraries

We provide all the necessary source code to build MPICH, DCMF, and SPI. To build these libraries, just type:

```
$ make -C comm rebuild-target
```

It may take half an hour to an hour to complete the build process, depending on which file system is being used (e.g., GPFS is a lot slower than a local file system).

The rebuild-target target does not know anything about the existing installation directory; it only copies the built libraries and header files to the comm/tmp directory. To install the newly built libraries, do the following:

```
$ make -C comm update-prebuilt
$ python install.py <zepto_dir>
```

The update-prebuilt target copies the files from the comm/tmp directory to the comm/prebuilt directory, which is where the install.py script looks for the files that it copies to <zepto_dir>.
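The three steps can be chained so that a failed build never reaches the install step. The following is a minimal sketch, assuming it is run from the directory that contains both `comm` and `install.py` and that the install path has no spaces. The function name `rebuild_and_install` and the `DRY_RUN` variable are hypothetical, not part of ZeptoOS.

```shell
# Hypothetical wrapper (not part of ZeptoOS): run the build, update-prebuilt,
# and install steps in order, stopping at the first failure.
# With DRY_RUN=1 the commands are printed instead of executed.
#   $1 = ZeptoOS install path (the <zepto_dir> placeholder)
rebuild_and_install() {
    zdir=$1
    for cmd in \
        "make -C comm rebuild-target" \
        "make -C comm update-prebuilt" \
        "python install.py $zdir"
    do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "$cmd"
        else
            # Unquoted on purpose so the string splits into command and arguments.
            $cmd || { echo "failed: $cmd" >&2; return 1; }
        fi
    done
}
```

Running it once with `DRY_RUN=1` is a cheap way to confirm the install path before starting a build that may take up to an hour.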

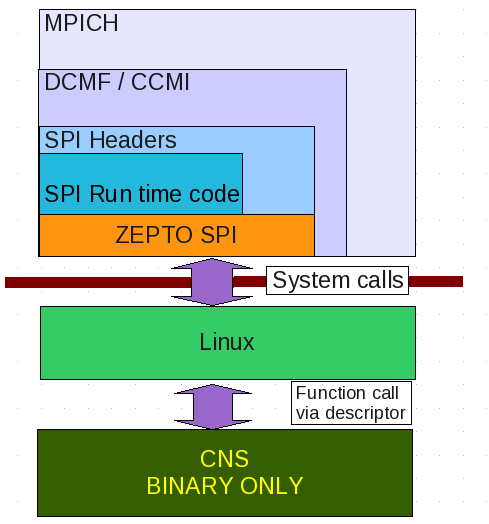

Software stack layout

The figure depicts the layout of the communication software stack in the ZeptoOS compute node environment. It is very similar to IBM's CNK stack, with the exception of an extra ZEPTO SPI layer and the use of Linux instead of CNK.

Since MPICH is a well-known software package we will not discuss it here, but we will briefly describe the DCMF and SPI components:

- DCMF
  - stands for Deep Computing Messaging Framework,
  - developed by IBM originally for Blue Gene architecture,
  - hardware initialization, query functions,
  - supports BGP Torus DMA, collective network,
  - provides a timer,
  - supports non-blocking collective operations,
  - BGP MPICH uses DCMF internally (IBM provides a glue layer).
- SPI
  - stands for System Programming Interface,
  - developed by IBM; BGP-specific code,
  - kernel interfaces: DMA control, lockbox, etc.,
  - DMA-related definitions
    - can be used in both user space and kernel space,
  - RAS, BGP personality, mapping-related functions.

BGP SPI was designed specifically for IBM CNK, so it is not compatible with Linux. ZEPTO SPI is a thin software layer that absorbs the differences between the CNK and Linux or drops the requests that Linux cannot handle.

Source code

The source code and header files of DCMF and SPI can be found in the comm directory. The source code of MPICH is in DCMF/lib/mpich2/mpich2-1.0.7.tar.gz, which is unpacked at build time.

The DCMF source code is located in DCMF/sys/, with the core code in DCMF/sys/messaging/. The Component Collective Messaging Interface (CCMI) is part of DCMF, and its source code is in DCMF/sys/collectives/. Test codes can be found in DCMF/sys/collectives/tests/ for CCMI and DCMF/sys/messaging/tests/ for the core. These test codes serve as good examples of DCMF/CCMI programming.

SPI headers are in arch-runtime/arch/ and SPI source code is in arch-runtime/runtime/. The source code of the ZEPTO SPI layer is in arch-runtime/zcl_spi/, while its header files are in arch-runtime/arch/include/zepto/. All of these paths are relative to the comm directory.

Here is an overview of the directory tree:

```
comm
|-- DCMF
|   |-- lib
|   |   |-- dev
|   |   `-- mpich2
|   |       `-- make
|   |-- sys
|   |   |-- collectives
|   |   |   |-- adaptor
|   |   |   |-- kernel
|   |   |   |-- tests
|   |   |   `-- tools
|   |   |-- include
|   |   `-- messaging
|   |       |-- devices
|   |       |-- messager
|   |       |-- protocols
|   |       |-- queueing
|   |       |-- sysdep
|   |       `-- tests
|-- arch-runtime
|   |-- arch
|   |   `-- include
|   |       |-- bpcore
|   |       |-- cnk
|   |       |-- common
|   |       |-- spi
|   |       `-- zepto
|   |-- runtime
|   |-- testcodes
|   `-- zcl_spi
`-- testcodes
```

Debug output

The ZeptoOS versions of SPI and DCMF have built-in debug output. The output is disabled by default and can be enabled by setting the environment variable ZEPTO_TRACE when submitting a job. The integer value of the variable indicates the debug level (a higher number results in more debug output).

An example:

```
$ cqsub -k <zepto_profile> -n 64 -t 10 ... -e ZEPTO_TRACE=2 ./a.out
```
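Since a higher level produces more output, one way to find a useful level is to submit the same job at several levels and compare the results. The sketch below only prints the corresponding cqsub commands. The function name `trace_sweep_cmds` is hypothetical, the node count and time limit are copied from the example above, and which levels the libraries actually distinguish is not documented here, so the upper bound is an assumption you supply.

```shell
# Hypothetical helper (not part of ZeptoOS): print one cqsub command per
# debug level from 1 up to a chosen maximum. Nothing is submitted.
#   $1 = kernel profile name (the <zepto_profile> placeholder)
#   $2 = highest ZEPTO_TRACE level to try
#   $3 = binary to run
trace_sweep_cmds() {
    profile=$1
    max_level=$2
    binary=$3
    level=1
    while [ "$level" -le "$max_level" ]; do
        echo "cqsub -k $profile -n 64 -t 10 -e ZEPTO_TRACE=$level $binary"
        level=$((level + 1))
    done
}
```

Each printed line can be pasted into the shell as is, or adjusted first if the job needs different cqsub options.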