MPICH, DCMF, and SPI

Revision as of 09:33, 30 April 2009

To support high performance computing (HPC) applications, specifically MPI applications, we have ported IBM CNK's communication software stack to the Zepto compute node Linux environment. MPICH in the Zepto release is mpich2-1.0.7 with the IBM patch applied. It is reasonably stable, and the performance of MPI applications on Zepto compute node Linux is comparable to that on CNK. Although the port currently has some limitations, it also brings several benefits.

Benefits:

- No limit on the number of threads

- 4 or more OpenMP threads per node

- Additional threads for I/O or background tasks

- It's on Linux!

- Debugging tools such as gdb, strace, etc.

- Various file systems such as ramfs
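As an illustration of running an extra thread for I/O or background work, the following minimal C sketch starts a background thread next to the compute loop using plain POSIX threads. It is not Zepto-specific code: the names `run_with_background_thread` and `background_io`, and the counter that stands in for real I/O, are hypothetical. MPI calls are deliberately kept out of the background thread, since whether they are safe there depends on the thread support level of the MPI library.

```c
#include <pthread.h>

/* Hypothetical state shared by the compute thread and the background
 * thread. The `pending` counter stands in for a queue of buffers. */
struct io_state {
    pthread_mutex_t lock;
    pthread_cond_t  cond;
    int pending;   /* items queued by the compute thread */
    int done;      /* set once the compute thread has finished */
    int written;   /* items handled by the background thread */
};

/* Background thread: handles queued items until the queue is empty
 * and the compute thread has signaled that it is done. */
static void *background_io(void *arg)
{
    struct io_state *s = arg;

    pthread_mutex_lock(&s->lock);
    for (;;) {
        while (s->pending == 0 && !s->done)
            pthread_cond_wait(&s->cond, &s->lock);
        if (s->pending == 0)
            break;              /* done and nothing left to handle */
        s->pending--;
        s->written++;           /* placeholder for the actual I/O */
    }
    pthread_mutex_unlock(&s->lock);
    return NULL;
}

/* Runs `steps` compute steps, queuing one item per step for the
 * background thread. Returns the number of items it handled, or -1. */
int run_with_background_thread(int steps)
{
    struct io_state s;
    pthread_t tid;

    pthread_mutex_init(&s.lock, NULL);
    pthread_cond_init(&s.cond, NULL);
    s.pending = s.done = s.written = 0;

    if (pthread_create(&tid, NULL, background_io, &s) != 0) {
        pthread_cond_destroy(&s.cond);
        pthread_mutex_destroy(&s.lock);
        return -1;
    }

    for (int i = 0; i < steps; i++) {
        /* ... one compute step (e.g. MPI communication) goes here ... */
        pthread_mutex_lock(&s.lock);
        s.pending++;
        pthread_cond_signal(&s.cond);
        pthread_mutex_unlock(&s.lock);
    }

    pthread_mutex_lock(&s.lock);
    s.done = 1;
    pthread_cond_signal(&s.cond);
    pthread_mutex_unlock(&s.lock);

    pthread_join(tid, NULL);
    pthread_cond_destroy(&s.cond);
    pthread_mutex_destroy(&s.lock);
    return s.written;
}
```

Link with -lpthread, as the manual compile line on this page already does. A real I/O thread would copy a buffer out under the lock and release the lock before writing it.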

Current limitations:

- Only SMP mode is supported

- Shared libraries are not provided yet

- No binary compatibility between CNK and Zepto CN Linux

We plan to support a VN-equivalent mode (one MPI rank per core) and to provide shared libraries in a future release.

As in the IBM CNK environment, the Deep Computing Messaging Framework (DCMF) and the System Programming Interface (SPI) are available, so you can also write DCMF or SPI code directly if necessary. DCMF is a communication library that provides non-blocking operations; please refer to the [DCMF wiki] for details. The DCMF in the Zepto release is version 1.0.0, which is older than the DCMF in the current driver release (V1R3M0). SPI is the lowest-level user-space API for the torus DMA, the collective network, BGP-specific lock mechanisms and other compute-node-specific implementations. There is no public documentation for it yet, but almost all of its header files and source code are available. Internally, MPICH depends on DCMF, which in turn depends on SPI.
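Non-blocking messaging layers such as DCMF are typically driven by posting an operation together with a completion callback and then polling an advance (progress) function until the callback has fired. The self-contained C sketch below simulates that pattern without any hardware. The names `op_post`, `op_advance`, `wait_for_all` and `struct completion_cb` are hypothetical and are not the DCMF API; the actual declarations are in `dcmf.h`.

```c
/* Hypothetical completion callback: a function plus opaque client data. */
struct completion_cb {
    void (*function)(void *clientdata);
    void *clientdata;
};

/* Simulated in-flight operation. It "completes" after `remaining`
 * calls to op_advance(); a real messaging layer would poll the
 * torus DMA or collective network hardware instead. */
struct pending_op {
    int active;
    int remaining;
    struct completion_cb cb;
};

#define MAX_OPS 8
static struct pending_op ops[MAX_OPS];

/* Post a non-blocking operation. Returns 0 on success, -1 if no slot is free. */
int op_post(int latency, struct completion_cb cb)
{
    for (int i = 0; i < MAX_OPS; i++) {
        if (!ops[i].active) {
            ops[i].active = 1;
            ops[i].remaining = latency;
            ops[i].cb = cb;
            return 0;
        }
    }
    return -1;
}

/* Make progress on every posted operation and invoke the callback of
 * each one that finishes. Returns how many completed in this call. */
int op_advance(void)
{
    int completed = 0;
    for (int i = 0; i < MAX_OPS; i++) {
        if (ops[i].active && --ops[i].remaining <= 0) {
            ops[i].active = 0;
            ops[i].cb.function(ops[i].cb.clientdata);
            completed++;
        }
    }
    return completed;
}

/* Typical client callback: count down the number of outstanding operations. */
static void count_down(void *clientdata)
{
    int *outstanding = clientdata;
    (*outstanding)--;
}

/* Post `n` operations with increasing latency, then spin on op_advance()
 * until every callback has fired. Returns the number of advance calls. */
int wait_for_all(int n)
{
    int outstanding = 0;
    struct completion_cb cb = { count_down, &outstanding };

    for (int i = 0; i < n; i++)
        if (op_post(i + 1, cb) == 0)
            outstanding++;

    int calls = 0;
    while (outstanding > 0) {
        op_advance();
        calls++;
    }
    return calls;
}
```

In this model, completion is observed only when the application calls the advance function, so a program that posts operations and never polls makes no progress.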

ZCB and Big memory

This is not a limitation as such, but an MPI application in the Zepto compute node environment (technically, any application that requires DMA operations and maximum memory bandwidth) needs to be a Zepto Compute Binary (ZCB). A ZCB has the 24th bit of the e_flags field (processor-specific flags) in its ELF header set. When the kernel loads an executable, it first examines this bit and treats a ZCB differently from a normal process: it creates a special memory mapping, called the big memory region, which is covered by large pages and semi-statically pinned down, and loads all application sections into it. The big memory region incurs virtually no TLB misses, and it permits DMA operations because it is an offset mapping (a fixed offset between virtual and physical addresses) rather than ordinary paged memory. Because of the big memory region, some system calls, such as fork, cannot be used from a ZCB.
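The sketch below shows, in C, what checking and setting this flag amounts to. It works on an in-memory copy of a 32-bit ELF header, uses the standard Linux `<elf.h>` definitions, and decodes e_flags in the file's own byte order (BG/P executables are big-endian PowerPC). The mask `ZCB_EFLAG` is our assumption: we read "24th bit" as bit 24 counting from zero (`1 << 24`); if the count is one-based, the mask would be `1 << 23`. The function names `is_zcb` and `mark_zcb` are hypothetical; to actually produce a ZCB, use the zelftool utility that ships with Zepto.

```c
#include <elf.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* ASSUMPTION: ZCB marker is bit 24 (zero-based) of e_flags.
 * Verify against zelftool before relying on this value. */
#define ZCB_EFLAG (UINT32_C(1) << 24)

/* Offset of e_flags in a 32-bit ELF header (36 bytes). */
#define EFLAGS_OFF offsetof(Elf32_Ehdr, e_flags)

/* Validate magic, class and data encoding.
 * Returns the EI_DATA value, or -1 if the header is unusable. */
static int elf32_encoding(const unsigned char *h, size_t len)
{
    if (len < sizeof(Elf32_Ehdr) || memcmp(h, ELFMAG, SELFMAG) != 0)
        return -1;
    if (h[EI_CLASS] != ELFCLASS32)
        return -1;
    if (h[EI_DATA] != ELFDATA2MSB && h[EI_DATA] != ELFDATA2LSB)
        return -1;
    return h[EI_DATA];
}

/* Read e_flags in the byte order recorded in the header. */
static uint32_t read_eflags(const unsigned char *h, int enc)
{
    const unsigned char *p = h + EFLAGS_OFF;
    if (enc == ELFDATA2MSB)
        return (uint32_t)p[0] << 24 | (uint32_t)p[1] << 16 |
               (uint32_t)p[2] << 8 | p[3];
    return (uint32_t)p[3] << 24 | (uint32_t)p[2] << 16 |
           (uint32_t)p[1] << 8 | p[0];
}

/* Write e_flags back in the header's byte order. */
static void write_eflags(unsigned char *h, int enc, uint32_t v)
{
    unsigned char *p = h + EFLAGS_OFF;
    for (int i = 0; i < 4; i++) {
        int shift = (enc == ELFDATA2MSB) ? 24 - 8 * i : 8 * i;
        p[i] = (unsigned char)(v >> shift);
    }
}

/* Returns 1 if the (assumed) ZCB bit is set, 0 if not,
 * -1 if the buffer is not a valid 32-bit ELF header. */
int is_zcb(const unsigned char *h, size_t len)
{
    int enc = elf32_encoding(h, len);
    if (enc < 0)
        return -1;
    return (read_eflags(h, enc) & ZCB_EFLAG) != 0;
}

/* Sets the (assumed) ZCB bit in place, conceptually the effect this page
 * attributes to zelftool. Returns 0 on success, -1 on an invalid header. */
int mark_zcb(unsigned char *h, size_t len)
{
    int enc = elf32_encoding(h, len);
    if (enc < 0)
        return -1;
    write_eflags(h, enc, read_eflags(h, enc) | ZCB_EFLAG);
    return 0;
}
```

To check an existing binary, read its first sizeof(Elf32_Ehdr) bytes into a buffer and pass them to is_zcb.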

Compiling HPC applications

While you can use the same compilers to compile your code, the Zepto compute node environment requires linking with Zepto-modified libraries. (An MPI application binary built for CNK does not work in the Zepto environment.)

Compilation wrapper scripts

We provide compilation wrapper scripts (listed below) that automatically link with the appropriate libraries installed in your Zepto installation path. They correspond to the set of wrapper scripts that IBM provides, with a "z" prefix. Once you have successfully compiled your code, submit it with a Zepto kernel profile (see the Kernel Profile section). Note: only SMP mode is currently supported.

- Wrapper scripts that invoke the BGP-enhanced GNU compilers: zmpicc, zmpicxx, zmpif77, zmpif90

- Wrapper scripts that invoke the IBM XL compilers: zmpixlc, zmpixlcxx, zmpixlf2003, zmpixlf77, zmpixlf90, zmpixlf95

- Wrapper scripts that invoke the IBM XL compilers (thread-safe compilation): zmpixlc_r, zmpixlcxx_r, zmpixlf2003_r, zmpixlf77_r, zmpixlf90_r, zmpixlf95_r

To see what a wrapper script actually does internally, run it with the -show option.

A compilation example

Understanding a program's build system may take some time, but compiling a program for the Zepto environment requires nothing special.

Here is a real example of how to build a well-known parallel application, the Parallel Ocean Program (POP).

$ wget http://climate.lanl.gov/Models/POP/POP_2.0.1.tar.Z
$ tar xvfz POP_2.0.1.tar.Z ; cd pop
$ ./setup_run_dir ztest ; cd ztest
$ edit ibm_mpi.gnu    ( see the patch below )
$ export ARCHDIR=ibm_mpi
$ make    # wait for a while
$ edit pop_in    # test data set
- nprocs_clinic = 4
- nprocs_tropic = 4
+ nprocs_clinic = 64
+ nprocs_tropic = 64
$ cqsub -n 64 -n 8 -k your_zepto_profile ./pop
--------------------
--- orig/ibm_mpi.gnu	2009-04-15 15:01:58.666457601 -0500
+++ ztest/ibm_mpi.gnu	2009-04-15 14:17:58.099132435 -0500
@@ -6,17 +6,18 @@
 # will someday be a file which is a cookbook in Q&A style: "How do I do X?"
 # is followed by something like "Go to file Y and add Z to line NNN."
 #
-FC = mpxlf90_r
-LD = mpxlf90_r
-CC = mpcc_r
-Cp = /usr/bin/cp
-Cpp = /usr/ccs/lib/cpp -P
+ZPATH=__INST_PREFIX__
+FC = $(ZPATH)/zmpixlf90
+LD = $(ZPATH)/zmpixlf90
+CC = $(ZPATH)/zmpixlc
+Cp = //bin/cp
+Cpp = /usr/bin/cpp -P
 AWK = /usr/bin/awk
-ABI = -q64
+#ABI = -q64
 COMMDIR = mpi
-NETCDFINC = -I/usr/local/include
-NETCDFLIB = -L/usr/local/lib
+NETCDFINC = -I/soft/apps/netcdf-4.0/include/
+NETCDFLIB = -L/soft/apps/netcdf-4.0/lib
 
 # Enable MPI library for parallel code, yes/no.
@@ -58,7 +59,8 @@
 #
 #----------------------------------------------------------------------------
 
-FBASE = $(ABI) -qarch=auto -qnosave -bmaxdata:0x80000000 $(NETCDFINC) -I$(ObjDepDir)
+#FBASE = $(ABI) -qarch=auto -qnosave -bmaxdata:0x80000000 $(NETCDFINC) -I$(ObjDepDir)
+FBASE = $(ABI) -qarch=auto -qnosave $(NETCDFINC) -I$(ObjDepDir)
 ifeq ($(TRAP_FPE),yes)
   FBASE := $(FBASE) -qflttrap=overflow:zerodivide:enable -qspillsize=32704

Without compiler scripts

If you cannot use the compilation wrapper scripts, make sure that your makefile or build environment points to the Zepto header files and libraries correctly. For example:

/bgsys/drivers/ppcfloor/gnu-linux/bin/powerpc-bgp-linux-gcc \
    -o mpi-test-linux -Wall -O3 -I__INST_PREFIX__/include/ mpi-test.c \
    -L__INST_PREFIX__/lib/ -lmpich.zcl -ldcmfcoll.zcl -ldcmf.zcl -lSPI.zcl -lzcl \
    -lzoid_cn -lrt -lpthread -lm
__INST_PREFIX__/bin/zelftool -e mpi-test-linux

NOTE:

- Replace __INST_PREFIX__ with your actual Zepto install path.

- Don't forget to run the zelftool utility, which makes your executable a Zepto Compute Binary so that the Zepto kernel loads all application segments into the big memory region.

The file layout in the Zepto install path is:

|-- bin
|   |-- zelftool
|-- include
|   |-- dcmf.h
|   |-- dcmf_collectives.h
|   |-- dcmf_coremath.h
|   |-- dcmf_globalcollectives.h
|   |-- dcmf_multisend.h
|   |-- dcmf_optimath.h
|   |-- mpe_thread.h
|   |-- mpi.h
|   |-- mpi.mod
|   |-- mpi_base.mod
|   |-- mpi_constants.mod
|   |-- mpi_sizeofs.mod
|   |-- mpicxx.h
|   |-- mpif.h
|   |-- mpio.h
|   |-- mpiof.h
|   `-- mpix.h
`-- lib
    |-- libSPI.zcl.a
    |-- libcxxmpich.zcl.a
    |-- libdcmf.zcl.a
    |-- libdcmfcoll.zcl.a
    |-- libfmpich.zcl.a
    |-- libfmpich_.zcl.a
    |-- libmpich.zcl.a
    |-- libmpich.zclf90.a
    |-- libzcl.a
    `-- libzoid_cn.a

Building MPICH, DCMF and SPI libraries

We provide all the source code needed to build MPICH, DCMF and SPI. To build these libraries, type:

$ make -C comm rebuild-target

The build may take half an hour to an hour, depending on the file system you use; for example, building on GPFS is noticeably slower than on a local scratch file system.

The rebuild-target target is not aware of your installation. To install the newly compiled libraries, run the following steps:

$ make -C comm update-prebuilt
$ python install.py __INST_PREFIX__

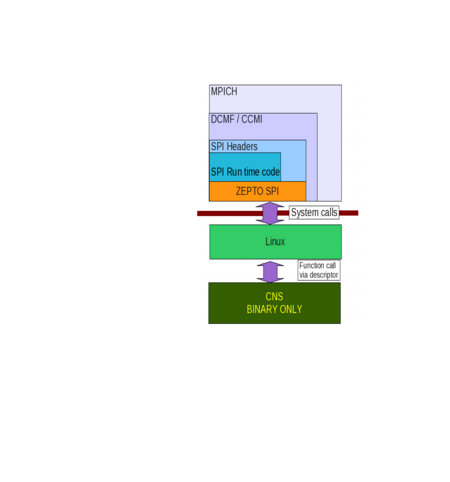

Software stack layout

The figure above depicts the layout of the communication software stack for the Zepto compute node environment. It is essentially the same as IBM CNK's stack, except that CNK's stack has no ZEPTO SPI layer and runs on CNK instead of Linux. We skip MPICH, since it is a well-known piece of software, and briefly describe DCMF and SPI here.

- DCMF

  - Stands for Deep Computing Messaging Framework

  - Originally developed by IBM for the Blue Gene architecture

  - Hardware initialization and query functions

  - Supports the BGP torus DMA and collective network

  - Provides timers

  - Supports non-blocking collective operations

  - BGP MPICH uses DCMF internally (IBM provides a glue layer)

- SPI

  - Stands for System Programming Interface

  - Developed by IBM; BGP-specific code

  - Kernel interfaces: DMA control, lockbox, etc.

  - DMA-related definitions

  - Can be used in both user space and kernel space

  - RAS, BGP personality, and mapping-related functions
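Mapping functions of this kind translate between an MPI rank and a node's coordinates on the 3D torus. As an illustration only, the C sketch below assumes SMP mode (one rank per node) and an XYZ ordering in which X varies fastest; the `struct torus` type and the functions `coords_to_rank`, `rank_to_coords` and `plus_x_neighbor` are hypothetical and do not come from the SPI headers.

```c
/* Hypothetical partition dimensions, in nodes. */
struct torus {
    int nx, ny, nz;
};

/* Rank of the node at (x, y, z), X varying fastest.
 * Returns -1 if the coordinates lie outside the partition. */
int coords_to_rank(const struct torus *t, int x, int y, int z)
{
    if (x < 0 || y < 0 || z < 0 || x >= t->nx || y >= t->ny || z >= t->nz)
        return -1;
    return x + t->nx * (y + t->ny * z);
}

/* Inverse of coords_to_rank. Returns 0 on success, -1 if rank is out of range. */
int rank_to_coords(const struct torus *t, int rank, int *x, int *y, int *z)
{
    if (rank < 0 || rank >= t->nx * t->ny * t->nz)
        return -1;
    *x = rank % t->nx;
    *y = (rank / t->nx) % t->ny;
    *z = rank / (t->nx * t->ny);
    return 0;
}

/* Rank of the +X neighbor, wrapping around as on a torus. */
int plus_x_neighbor(const struct torus *t, int rank)
{
    int x, y, z;
    if (rank_to_coords(t, rank, &x, &y, &z) != 0)
        return -1;
    return coords_to_rank(t, (x + 1) % t->nx, y, z);
}
```

On a 4x4x4 partition, for instance, coordinates (1, 2, 3) map to rank 1 + 4 * (2 + 4 * 3) = 57, and the +X neighbor of rank 3 wraps around to rank 0.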

BGP SPI is designed only for IBM CNK, so it is not directly compatible with Linux. ZEPTO SPI is a thin software layer that absorbs the differences between CNK and Linux, or drops the requests that Linux cannot handle.
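To make the idea of a thin translation layer concrete, here is a deliberately simplified C sketch of the dispatch pattern described above: requests that have a Linux-side implementation are forwarded, and the rest are dropped, here by returning -ENOSYS (the real layer may instead ignore them silently). Every name in it, including the request codes, the linux_* stubs and zepto_spi_dispatch, is hypothetical; the real ZEPTO SPI code lives in the comm directory and may be structured quite differently.

```c
#include <errno.h>

/* Hypothetical request codes a CNK-oriented SPI might issue. */
enum spi_request {
    SPI_REQ_DMA_CONTROL,
    SPI_REQ_LOCKBOX,
    SPI_REQ_PERSONALITY,
    SPI_REQ_CNK_ONLY      /* something with no Linux equivalent */
};

/* Hypothetical stubs standing in for Linux-side implementations. */
static int linux_dma_control(long arg) { return arg >= 0 ? 0 : -EINVAL; }
static int linux_lockbox(long arg)     { return arg >= 0 ? 0 : -EINVAL; }
static int linux_personality(long arg) { (void)arg; return 0; }

/* Thin shim: forward what Linux can serve, drop everything else. */
int zepto_spi_dispatch(enum spi_request req, long arg)
{
    switch (req) {
    case SPI_REQ_DMA_CONTROL: return linux_dma_control(arg);
    case SPI_REQ_LOCKBOX:     return linux_lockbox(arg);
    case SPI_REQ_PERSONALITY: return linux_personality(arg);
    default:                  return -ENOSYS;  /* dropped: unsupported on Linux */
    }
}
```

Keeping the translation in one dispatch point means code above it, such as DCMF, can keep issuing CNK-style requests unchanged.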

Source code

The source code and header files of MPICH, DCMF and SPI can be found in the comm directory. The MPICH source code is in the archive comm/DCMF/lib/mpich2/mpich2-1.0.7.tar.gz, which is extracted at build time.

|-- DCMF
|   |-- lib
|   |   |-- dev
|   |   `-- mpich2
|   |       `-- make
|   |-- sys
|   |   |-- collectives
|   |   |-- include
|   |   |-- messaging
|-- arch-runtime
|   |-- arch
|   |   `-- include
|   |       |-- bpcore
|   |       |-- cnk
|   |       |-- common
|   |       |-- spi
|   |       `-- zepto
|   |-- runtime
|   |-- testcodes
|   `-- zcl_spi
`-- testcodes